Logical Fallacies, Biases, and Useful Heuristics

January 11, 2021 • ☕️☕️ 10 min read

I had the chance to read some interesting books about human behavior over the last few years. I found many of the concepts, theories, and fallacies in these books a useful reference when dealing with others and good general heuristics when making decisions.

This is a growing list of notes I initially collected for myself, but I hope it will be useful for others too!

Here is a list of the books in case anyone is interested in going deeper:

- Misbehaving: The Making of Behavioral Economics

- Loserthink: How Untrained Brains Are Ruining America

- Never Split the Difference

- Unconditional Parenting - You will be surprised, but dealing with most people is no different from raising kids.

- Influence: The Psychology of Persuasion

- Predictably Irrational

There are also some other books and resources mentioned here and there throughout the list.

There you go (the list is not in any particular order):

Hot hand fallacy:

In basketball, when a player scores several baskets in a row, people assume the streak will continue and that the player will keep scoring.

You can see this in many other situations, e.g., the stock market and even programming. For example, developers sometimes assume that a service is down for a specific reason, without investigating, just because that is what happened the last few times the service was down.
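To make the basketball case concrete, here is a minimal simulation sketch. It assumes a hypothetical shooter whose shots are independent, with a made-up 50% hit rate, so there is no hot hand by construction. Real players may differ; the point is only that streaks show up even when nothing "hot" is going on.

```python
import random

def hit_rate_after_streak(shots, streak_len):
    """Fraction of made shots among those that directly follow `streak_len` makes in a row."""
    followers = [
        shots[i]
        for i in range(streak_len, len(shots))
        if all(shots[i - streak_len:i])
    ]
    return sum(followers) / len(followers)

rng = random.Random(42)  # fixed seed so the run is repeatable
# Assumption: every shot is an independent coin flip with a 50% chance of going in.
shots = [rng.random() < 0.5 for _ in range(200_000)]

overall = sum(shots) / len(shots)
after_three = hit_rate_after_streak(shots, 3)

print(f"overall hit rate:           {overall:.3f}")
print(f"hit rate after three makes: {after_three:.3f}")
```

Both numbers come out close to each other: for this simulated shooter, three makes in a row say nothing about the next shot.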

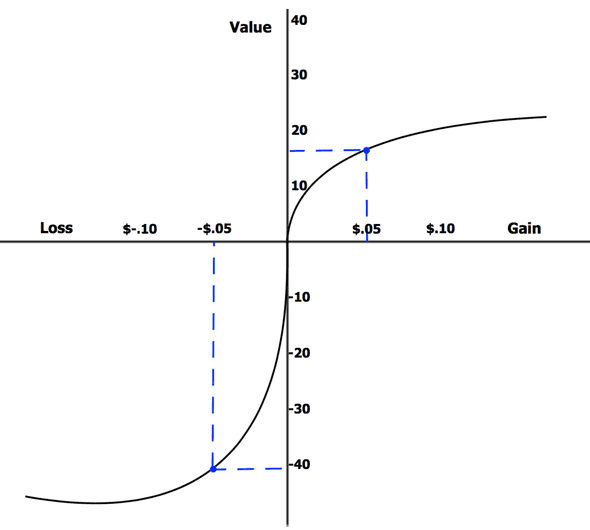

Loss aversion:

It is the tendency to prefer avoiding losses to acquiring equivalent gains. People feel more pain when they lose something than pleasure when they gain something of the same value.

This is related to The Endowment effect (next point).
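A rough way to picture loss aversion is a value function that weighs losses more heavily than gains. The sketch below is only an illustration: `felt_value` and the `loss_aversion` factor of 2.0 are assumptions made up for this example, not numbers taken from the books above.

```python
def felt_value(change, loss_aversion=2.0):
    """Subjective value of a gain or loss; losses are scaled up by `loss_aversion`."""
    # Assumption: gains are felt at face value, losses are felt `loss_aversion` times stronger.
    return change if change >= 0 else loss_aversion * change

gain = felt_value(100)    # pleasure from winning 100
loss = felt_value(-100)   # pain from losing 100

# A fair coin flip: win 100 or lose 100. In money terms it is worth exactly 0,
# but in "felt" terms it comes out negative.
coin_flip = 0.5 * gain + 0.5 * loss

print(gain, loss, coin_flip)
```

Under these assumptions, a bet that is neutral in money terms still feels like a bad deal, which is why people tend to turn it down.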

Endowment effect:

People are more likely to retain an object they own than acquire that same object when they do not own it. People tend to overvalue what they have.

For example, in the “Predictably Irrational” book, the author describes an experiment on students who had entered a lottery for tickets to a basketball game. The ones who won tickets asked for about $2,400 on average to sell them, while the ones who didn’t win were willing to pay only about $170. Even though both groups had been equally eager to get the tickets, once one group owned them, they started to value them far more than the others did.

Another example from the software engineering world: have you ever seen people falling in love with “their” code? There you go!

This is also related to The IKEA effect.

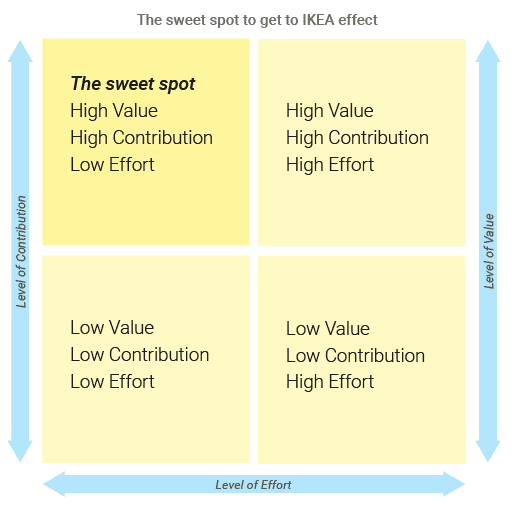

The IKEA effect:

It is a cognitive bias in which consumers place a disproportionately high value on products they partially created.

Cognitive dissonance:

It is the discomfort of acting against your own beliefs, which people usually resolve by rationalizing what they are doing.

For example, you see your doctor smoking and ask him why. Instead of admitting it is wrong, he explains why it is fine and even useful for him somehow. It is always interesting to observe cognitive dissonance in others.

Another example from the software engineering world: have you ever been very critical of other people’s code when reviewing their PRs, only to find yourself making the same mistakes in your own code and justifying them somehow?

The Stockdale Paradox:

From the book “Good to Great.” A similar phenomenon was mentioned in “Man’s Search For Meaning” but not under the same name.

In short, have a strong belief that you will eventually prevail, but also face your current realities.

I think that is crucial for anyone who is building a business. Sometimes, the company will be facing a crisis. If you don’t believe that you can get through it and emerge successful, you will get depressed and stop working. Some people call that persistence: the ability to keep going through obstacles without giving up.

But beware of giving yourself false hope by setting dates by which it will be over. In Viktor Frankl’s book, he mentions that some prisoners would set dates to give themselves hope, e.g., “I will be out by the new year and back with my family.” When the date approached and it didn’t seem to be happening, those people lost hope and even the will to live, and many of them became very depressed and sick and died shortly after.

You can read more about The Stockdale Paradox here.

Survivorship bias:

It happens when we concentrate on the people or things that made it through some selection process and overlook those that did not.

For example, you might hear: “You shouldn’t complete your college degree because many successful startup founders don’t have one!” But maybe you think that only because you are looking at the sample that survived and became successful. What about the ten times as many founders who failed and whom you never heard of?
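The founder example can be sketched with a tiny made-up dataset. Everything here is hypothetical (the cohort size, the number of successes, and the `success_rate` helper); it only shows how filtering on the outcome distorts what you see.

```python
# Hypothetical cohort of 1,000 founders without a degree; the numbers are made up.
cohort = [{"name": f"founder-{i}", "succeeded": i < 50} for i in range(1000)]

def success_rate(founders):
    """Share of founders in the given group who succeeded."""
    return sum(f["succeeded"] for f in founders) / len(founders)

# What the success stories cover: only the founders who made it through the filter.
visible = [f for f in cohort if f["succeeded"]]

print(f"success rate in the stories you hear: {success_rate(visible):.0%}")
print(f"success rate in the whole cohort:     {success_rate(cohort):.0%}")
```

If you only ever look at `visible`, skipping the degree looks like a sure thing. The full cohort tells a very different story.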

Confirmation bias:

This is one of the most common biases, and I have personally caught myself falling for it many times.

When you hold a specific belief or opinion about something, you tend to pick and interpret information in a way that confirms or supports that belief.

For example, suppose I start believing that some programming language is terrible. From then on, I will notice anything that suggests it doesn’t follow a best practice, copies another language, or has a steep learning curve. I will ignore its benefits and only pick what supports my opinion, confirming it and making it stronger.

Parkinson’s law:

Oh boy! This is the most common one I’ve seen in software projects! I first heard about it in the fantastic book “The Making of a Manager.”

It states that “work expands to fill the time available for its completion.”

For example, how many times have you started a new project, given yourself ample time to finish it, and still ended up delivering past the deadline or scrambling during the last week of the project? (Been there, done that!)

How to avoid it? Break big projects and tasks into smaller chunks, follow up, and reprioritize from the early days of the project. Knowing the impact of losing a single day of work in a three-month project will push you to estimate better and not waste time.

Occam’s razor:

When faced with two possible explanations, prefer the simpler one, the one that needs fewer assumptions. It is usually the right one.

Also related: KISS (Keep It Simple Stupid)

KISS (Keep It Simple Stupid):

States that most systems work best if they are kept simple rather than made complicated.

So the next time you see that overly complicated and abstracted Pull Request which tries to handle imaginary future use cases, you KISS the developer who wrote it!

Combinatorial explosion:

In mathematics, a combinatorial explosion is the rapid growth of the complexity of a problem. The number of combinations of the problem is affected by the problem’s input, constraints, and bounds.

For example, suppose you are building a text input component with a status that can be ENABLED or DISABLED. So far, it is simple. Now add another piece of state that tracks whether the text is VALID or INVALID. Suddenly, with only two pieces of state, you have four combinations: ENABLED-VALID, ENABLED-INVALID, DISABLED-VALID, and DISABLED-INVALID. You can already see what’s happening here. The number of possible combinations doesn’t grow linearly but exponentially: with n independent two-valued states, the number of combinations is 2^n.

Being aware of all these combinations while building a software system makes it easier to avoid bugs early on. Many bugs come from a combination of states that you didn’t handle. State machines and statecharts are one of the best approaches to address this.
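To see the growth for yourself, here is a small sketch that enumerates the combinations for the text input example. The `status` and `validity` values come from the example above; the third `focus` flag and the `combinations` helper are hypothetical additions, there only to show what one more piece of state does.

```python
from itertools import product

# Each independent piece of state and the values it can take.
# "focus" is a hypothetical third flag, added only for illustration.
state_dimensions = {
    "status": ["ENABLED", "DISABLED"],
    "validity": ["VALID", "INVALID"],
    "focus": ["FOCUSED", "BLURRED"],
}

def combinations(dimensions):
    """Return every combination of values across the given state dimensions."""
    return list(product(*dimensions.values()))

two_flags = combinations({k: state_dimensions[k] for k in ("status", "validity")})
three_flags = combinations(state_dimensions)

for combo in two_flags:
    print("-".join(combo))

print(len(two_flags))    # 4 combinations with two flags
print(len(three_flags))  # 8 combinations with three flags
```

Every extra two-valued flag doubles the count, so listing the combinations like this is a cheap way to check which ones your component actually handles.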

Functional fixedness:

It is a cognitive bias that limits a person to using an object only in the way it is traditionally used.

I think a related concept is one I read about in the book “The Inner Game of Tennis,” which talks about how the existence of best practices causes newcomers to the game to emulate those practices without first trying what works best for them.

Matthew effect:

Matthew effect of accumulated advantage can be summarized as: “the rich get richer, and the poor get poorer.”

I’ve seen this effect in some software teams, where a manager unintentionally keeps a developer working on legacy projects for so long that they fall behind technically. Then, because they have become less technically competent, the manager keeps them on the same kind of small legacy projects, which makes the problem worse.

Team Leads need to be aware of this and diversify knowledge and projects in teams to ensure everyone is improving and leveling up.

The illusion of explanatory depth:

It is the incorrectly held belief that one understands the world on a deeper level than one actually does.

No True Scotsman/Appeal To Purity:

It is a fallacy in which one attempts to protect a universal generalization from counterexamples by changing the definition in an ad hoc fashion to exclude the counterexample.

Consider the statement “All Frontend developers have to learn React.” Someone replies, “I’m a FE developer, and I don’t use React.” The first person then answers, “Well, every ‘good’ FE developer learns React.”

Hero syndrome:

The hero syndrome is a phenomenon affecting people who seek heroism or recognition, usually by creating a situation that they can resolve.

People with hero syndrome generally cause an accident or disaster and then come to render aid and become the ‘hero.’

Not invented here Syndrome:

Oh, this one is also one of the most common in the software engineering world.

It is the tendency to avoid using or buying products, research, or knowledge from external origins. People would have a strong bias against ideas from the outside.

NIH is often the result of pride that makes an organization believe that they can solve a problem better than pre-existing solutions already do.

There are plenty of examples of that in the software engineering world, where teams spend months building tools in-house just because the open-source or even paid alternatives are “Not Invented Here,” so they must be of lower quality.

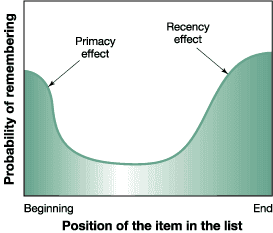

The Recency Effect (related: Serial-position effect, Primacy effect):

- The Recency Effect is the tendency to remember the most recently presented information best.

- The Primacy Effect is the tendency to remember the first presented information best.

- The Serial-Position Effect is the tendency of a person to recall the first and last items in a series best and the middle items worst.

Sunk Cost Fallacy:

This is one of the most important fallacies to spot so you can cut your losses early. It is the idea that we sometimes continue to invest (effort, money, etc.) in specific endeavors just out of fear of losing what we have invested in them so far.

For example, you start working on a side project. After some time, it is clear that you are not solving a real problem and the project is not moving in the right direction, but you continue anyway because otherwise all the time and effort you spent on it would be for nothing!

To avoid this one, you need discipline and a few success metrics to track. If you don’t meet them, you force yourself to shut the project down or stop the behavior you are invested in.

The Opportunity Cost:

It is the forgone benefit that would have been derived by an option not chosen (Investopedia definition).

For example, you decide to do a full-time Master’s degree for the next two years. Someone could argue that you will miss out on having an actual full-time job and the work experience that comes with it, or on starting your own company and getting experience in that, etc.

But beware of crying over lost opportunities in retrospect. That will only get you depressed and distract you from focusing on future opportunities.

The Compound Effect:

Understanding and practicing compounding is one of the most valuable behaviors you can acquire in your life. It is the idea that small, seemingly insignificant actions done continuously over time lead to huge rewards in the future.

Keep in mind that, to make compounding work, you have to make the action small enough that it is easy to keep doing it. You shouldn’t stop for no real reason. Or as Charlie Munger puts it: “The first rule of compounding: Never interrupt it unnecessarily.”
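A quick back-of-the-envelope sketch shows how fast this adds up. The 1% daily improvement, the 365-day horizon, and the `compound` helper are all assumptions picked for illustration, not figures from the books.

```python
def compound(start, daily_rate, days):
    """Value after applying the same relative improvement every day."""
    return start * (1 + daily_rate) ** days

# Assumption: you improve by 1% of your current level every single day.
steady = compound(1.0, 0.01, 365)       # a full year without interruption
interrupted = compound(1.0, 0.01, 180)  # stopping roughly half-way through
linear = 1.0 + 0.01 * 365               # same daily effort, but gains don't build on each other

print(f"compounding for 365 days: {steady:.2f}x")
print(f"compounding for 180 days: {interrupted:.2f}x")
print(f"no compounding, 365 days: {linear:.2f}x")
```

Most of the reward sits in the second half of the year, which is exactly why interrupting the streak is so costly.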

If you want to read more about compounding specifically in personal finance, read The Psychology of Money. And about compounding in general, I highly recommend reading The Compound Effect.

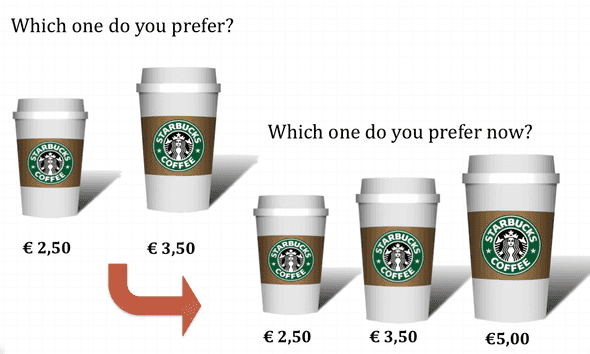

The Decoy Effect:

It is usually used in marketing: alongside the two options you are choosing between, you are offered a third option (the decoy) that is designed to push you towards the one more profitable for the seller.


Arbitrary Coherence Effect:

It is the idea that people start making consistent decisions based on an initial arbitrary data point. So you start with an arbitrary data point, which then shapes your behavior into a coherent pattern of decisions.

For example, if you are moving to a new city and trying to rent a house, the first price you see on the market will influence your later decisions and your willingness to pay.

Another example: in one experiment, college students were asked to bid on an item. First, they had to write the last two digits of their social security number on the bidding sheet, followed by their bid. The result was that people with higher two-digit numbers bid higher prices, and vice versa!

The Influence of Arousal:

In the “Predictably Irrational” book, the author talks about how people in an aroused state can make terrible decisions. He describes it as if you had two personalities: your normal, sensible one and another impulsive, unpredictable one.

In the book, the author mentions how some people freeze their credit cards in a block of ice, so when they want to use them to buy the next big online offer, they are forced to wait until that arousal period is over and they are back to their normal selves.

I found that similar to a piece of advice from the “Managing Humans” book, where the author talks about the ability of “Soaking the punches,” which I found to be excellent advice to practice in life. For example, you get an email and want to reply right away to defend some point or prove someone wrong. Instead, take it in and wait until maybe the next day. Most of the time you won’t even send the reply, because you will realize it was a bad decision your normal self wouldn’t make.

Analysis paralysis:

Analysis paralysis describes an individual or group process in which overanalyzing or overthinking a situation can cause forward motion or decision-making to become “paralyzed,” meaning that no solution or course of action is decided upon (Wikipedia definition).

This one is prevalent in software engineering and product teams. People can spend so much time overanalyzing the data or the best approach to building a system that planning itself takes too long, and they end up abandoning the project altogether.

Thanks for reading! This list is a living document that I will keep updating with new useful concepts as I learn about them, so keep coming back!